Web Scraping (also called Screen Scraping, Web Data Extraction, or Web Harvesting) is a technique for extracting large amounts of data from websites and saving it to a local file on your computer or to a database in table (spreadsheet) format.

Data displayed by most websites can only be viewed in a web browser; the websites offer no way to save a copy of this data for personal use. The only option then is to copy and paste the data manually, a very tedious job that can take many hours or sometimes days to complete. Web Scraping automates this process: instead of you copying the data from websites by hand, Web Scraping software performs the same task in a fraction of the time.

Web scraping software automatically loads and extracts data from multiple pages of a website based on your requirements. It is either custom built for a specific website or can be configured to work with any website. With the click of a button you can save the data available on the website to a file on your computer.

Practical Usage Scenarios

- 1. Extract product details including price, images etc. from eCommerce websites for populating other websites, competition monitoring etc.

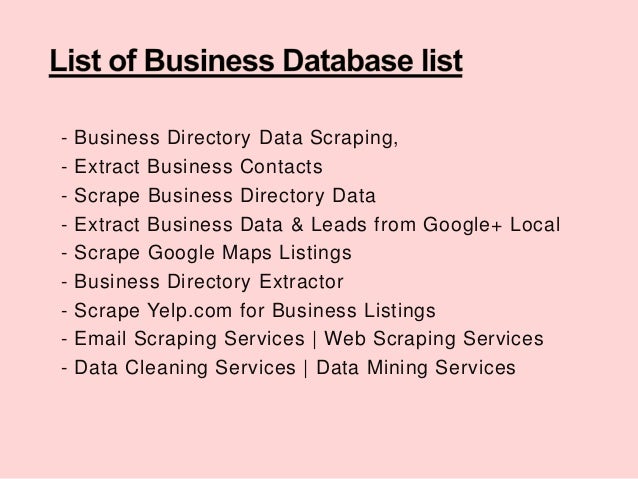

- 2. Extract business contact details including name, address, email, phone, website etc. from Yellow Pages, Google Maps etc. for marketing and lead generation.

- 3. Extract property details as well as agent contact details from real estate websites.

Methods of Web Scraping

- 1. Using software

- 2. Writing code

Web Scraping software falls into two categories: software that is installed locally on your computer, and software that runs in the cloud and is used through a browser. WebHarvy, OutWit Hub, Visual Web Ripper etc. are examples of web scraping software that can be installed on your computer, whereas import.io, Mozenda etc. are examples of cloud data extraction platforms.

You can hire a developer to build custom data extraction software for your specific requirement. The developer can in turn make use of web scraping APIs, which make the software easier to develop. For example, apify.com lets you easily get APIs to scrape data from any website.

Our Solution

The problem with most generic web scraping software is that it is very difficult to set up and use, and there is a steep learning curve involved. WebHarvy was designed to solve this problem. With a very intuitive point-and-click interface, WebHarvy allows you to start extracting data from any website within minutes.

Please watch the following demonstration which shows how easy it is to configure and use WebHarvy for your data extraction needs.

As the demonstration shows, WebHarvy is a point-and-click web scraper (visual web scraper) that lets you scrape data from websites with ease. Unlike most other web scraping software, WebHarvy can be configured to extract the required data from websites with mouse clicks: you just select the data to be extracted by pointing with the mouse. Yes, it is that easy! We recommend that you try the evaluation version of WebHarvy or watch the video demo.

Since their inception, websites have been used to share information. Whether it is a Wikipedia article, a YouTube channel, an Instagram account, or a Twitter handle, they are all packed with interesting data that is available to everyone with internet access and a web browser.

But what if we want to get specific data programmatically?

There are two ways to do that:

- Using official API

- Web Scraping

The concept of an API (Application Programming Interface) was introduced to exchange data between different systems in a standard way. However, many website owners don’t provide an API. In that case, the only option left is to extract the data using web scraping.

Every web page is returned from the server as HTML, which means the data we want is packed inside HTML elements. This structure makes retrieving specific data fairly straightforward.
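To make this concrete, here is a minimal sketch that uses only Python’s standard library. The HTML snippet, the price class, and the PriceParser name are made up for illustration; real pages are messier, and the rest of this tutorial uses Scrapy instead.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects the text of every <span class="price"> element."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) tuples
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price:
            self.prices.append(data.strip())


# Hypothetical page content; a real scraper would download this first.
page = (
    '<ul>'
    '<li>Pen <span class="price">1.50</span></li>'
    '<li>Book <span class="price">12.00</span></li>'
    '</ul>'
)

parser = PriceParser()
parser.feed(page)
print(parser.prices)
```

The data sits inside predictable elements, so a few lines of parsing logic are enough to pull it out.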

This tutorial is a guide to web scraping with the Python programming language. First, I’ll walk you through some basic examples to make you familiar with web scraping. Later on, we’ll use that knowledge to extract football match data from Livescore.cz.

Without further ado, let’s get started.

Getting Started

To get started, create a new Python 3 project and install Scrapy (a web scraping and web crawling library for Python), for example with pipenv install scrapy. I’m using pipenv for this tutorial, but you can use pip and venv, or conda.

At this point, you have Scrapy, but you still need to create a new web scraping project. For that, Scrapy provides a command-line tool that does the work for us.

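Let’s now create a new project named web_scraper using the Scrapy CLI. The command is scrapy startproject web_scraper. If you are using pipenv like me, prefix it with pipenv run; otherwise, run it as is from your virtual environment.

This will create a basic project in the current directory, containing a scrapy.cfg file and a web_scraper package with items.py, middlewares.py, pipelines.py, settings.py, and a spiders folder.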

Building our first Spider with XPath queries

We will start our web scraping tutorial with a very simple example. First, we’ll locate the logo of the Live Code Stream website inside the HTML. Since the logo is just text and not an image, we’ll simply extract this text.

The code

To get started, we need to create a new spider for this project. We can do that either by creating a new file or by using the CLI.

Since we already know the code we need, we will create a new Python file at the path /web_scraper/spiders/live_code_stream.py

Here are the contents of this file.

Code explanation:

- First of all, we import the Scrapy library, because we need its functionality to create a Python web spider. This spider is then used to crawl the specified website and extract useful information from it.

- We create a class named LiveCodeStreamSpider. It inherits from scrapy.Spider, which is why scrapy.Spider is passed as a parameter.

- An important step is to define a unique name for the spider using a variable called name. You cannot reuse the name of an existing spider; the name must be unique throughout the project.

- After that, we pass the website URL using the start_urls list.

- Finally, we create a method called parse() that locates the logo inside the HTML code and extracts its text. Scrapy offers two ways to find HTML elements inside the source code: CSS selectors and XPath.

You can even use external libraries like BeautifulSoup and lxml. For this example, we’ve used XPath.

A quick way to determine the XPath of any HTML element is to inspect it in Chrome DevTools. Right-click the HTML code of that element, hover the mouse cursor over “Copy” in the popup menu that appears, and click the “Copy XPath” menu item.

By the way, I used /text() after the actual XPath of the element to retrieve only the text from that element instead of the full element code.

Note: The names of the variable, list, and function mentioned above (name, start_urls, and parse()) are predefined in the Scrapy library, so you must use them exactly as they are. Otherwise, the program will not work as intended.

Run the Spider:

Since we are already inside the web_scraper folder in the command prompt, let’s execute our spider and write the result to a new file, lcs.json, by running scrapy crawl followed by the spider’s name and the option -o lcs.json. The result will be well structured, in JSON format.

Results:

When the above code executes, we’ll see a new file lcs.json in our project folder.

Here are the contents of this file.
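The file contents are not reproduced in this copy, and they depend on the page at crawl time. Scrapy’s JSON feed export writes the yielded items as a JSON array, so the file can be read back with the standard json module. The sketch below uses a made-up stand-in item with a logo key (an assumption about what the spider yields) so that it runs without crawling anything.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical stand-in for the items a spider might have written to lcs.json.
sample_items = [{"logo": "Live Code Stream"}]

with tempfile.TemporaryDirectory() as tmp_dir:
    path = Path(tmp_dir) / "lcs.json"
    path.write_text(json.dumps(sample_items), encoding="utf-8")

    # Reading the export back: a list with one dict per scraped item.
    items = json.loads(path.read_text(encoding="utf-8"))

logos = [item["logo"] for item in items]
print(logos)
```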

Another Spider with CSS query selectors

Most of us love sports, and football is my personal favorite.

Football tournaments are organized frequently throughout the world. Several websites provide a live feed of match results while the matches are being played, but most of these websites don’t offer any official API.

That gives us an opportunity to use our web scraping skills and extract meaningful information by scraping the websites directly.

For example, let’s have a look at the Livescore.cz website.

On their home page, they have nicely displayed tournaments and their matches that will be played today (the date when you visit the website).

We can retrieve information like:

- Tournament Name

- Match Time

- Team 1 Name (e.g. Country, Football Club, etc.)

- Team 1 Goals

- Team 2 Name (e.g. Country, Football Club, etc.)

- Team 2 Goals

- etc.

In our code example, we will be extracting tournament names that have matches today.

The code

Let’s create a new spider in our project to retrieve the tournament names. I’ll name this file livescore_t.py.

Here is the code that you need to enter inside /web_scraper/web_scraper/spiders/livescore_t.py

Code explanation:

- As usual, import Scrapy.

- Create a class that inherits the properties and functionality of scrapy.Spider.

- Give a unique name to our spider. Here, I used LiveScoreT as we will only be extracting the tournament names.

- The next step is to provide the URL of Livescore.cz.

- At last, the parse() function loops through all the matched elements that contain a tournament name and returns each one using yield. In the end, we receive all the tournament names that have matches today.

A point to be noted is that this time I used a CSS selector instead of XPath.

Run the newly created spider:

It’s time to see our spider in action. Run scrapy crawl LiveScoreT -o ls_t.json to let the spider crawl the home page of the Livescore.cz website. The web scraping result will then be saved to a new file called ls_t.json in JSON format.

By now you know the drill.

Results:

This is what our web spider extracted from Livescore.cz on 18 November 2020. Remember that the output may change every day.

A more advanced use case

In this section, instead of just retrieving the tournament names, we will go the extra mile and get complete details of tournaments and their matches.

Create a new file inside /web_scraper/web_scraper/spiders/ and name it livescore.py. Now, enter the below code in it.

Code explanation:

The code structure of this file is the same as in our previous examples. Here, we just updated the parse() method with new functionality.

We extract all the HTML <tr></tr> elements from the page, then loop through them to find out whether each one is a tournament or a match. If it is a tournament, we extract its name. In the case of a match, we extract its “time”, “state”, and “name and score of both teams”.

Run the example:

In the console, run scrapy crawl with the name of the new spider and an output file passed through the -o option, as in the previous examples.

Results:

Here is a sample of what it retrieved:

Now with this data, we can do anything we want, like use it to train our own neural network to predict future games :p.

Conclusion

Data analysts often use web scraping to collect the data they need to build predictions. Similarly, businesses use it to extract emails from web pages, as it is an effective way of generating leads. We can even use it to monitor the prices of products.

In other words, web scraping has many use cases, and Python is fully capable of handling them.

So, what are you waiting for? Try scraping your favorite websites now.

Thanks for reading!